Cisco MDS Directors: top five architectural advantages

The Cisco MDS Director class Fibre Channel (FC) switches have several unique features that are purpose-built for switching FC-based storage protocols. In this post I will touch upon some of the architectural aspects of the MDS so that the differentiation it brings to the table is clear. In some ways you can also consider this post a short guide to the internal workings of MDS directors.

Before delving any deeper, let's first understand the main components of an MDS director and also level set on some of the common terminology used in this context:

1. Chassis: The outer skeletal frame that holds the switch together. It comes with a standard set of peripherals like power supplies, fans etc.

2. Ports or Interfaces: The FC ports through which FC frames enter (ingress) and leave (egress) the switch. A modular Line card (LC) hosts these ports and is inserted into designated slots in the chassis.

3. Supervisor: The brain of the switch that supervises all the switch operations. It also runs various FC software protocols required for its functioning. An important component of the Supervisor is an Arbiter that decides which frame(s) on which ingress/egress port should be scheduled for processing next. There are two modular supervisors per switch for redundancy.

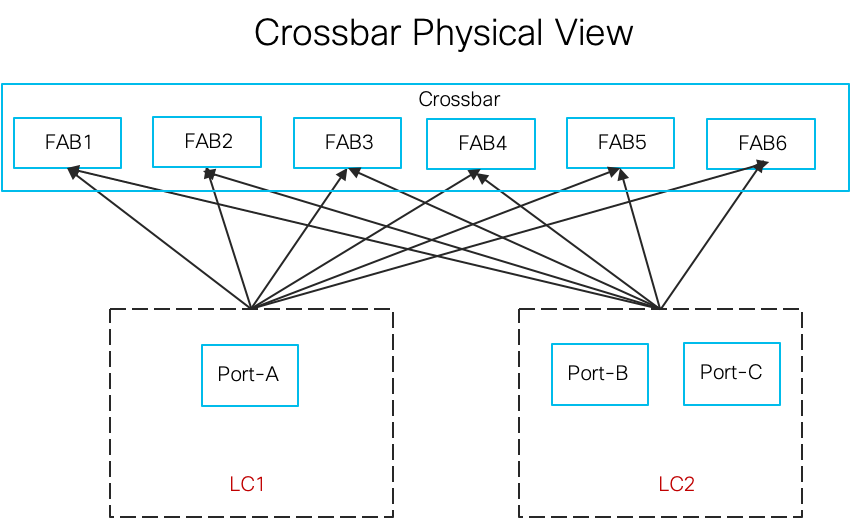

4. Crossbar: A function within the switch that interconnects any ingress port to any egress port for the purpose of frame switching. The crossbar on the MDS consists of up to 6 modular fabric modules.

5. Buffers and Queues: Due to delays inherent in a switching function, some amount of buffering/queueing is associated at various stages within the switch to hold frames till they are processed and switched out.

6. Switching features: The main logic of a switching function. The most fundamental among these is the forwarding function, which looks at the FC D_ID (Destination ID) field in the header of every FC frame arriving at an ingress port and decides which egress port to forward it to. There are several other switching features, like Hard Zoning, which decides whether frames have to be allowed or denied.

The above functions are implemented using hardware based on custom Cisco ASICs and NX-OS software. The diagram below shows the main hardware components of the switch.

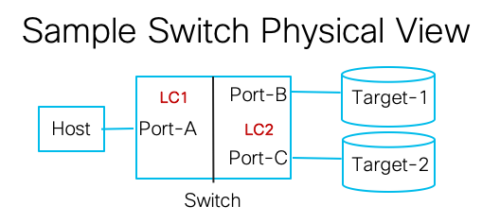

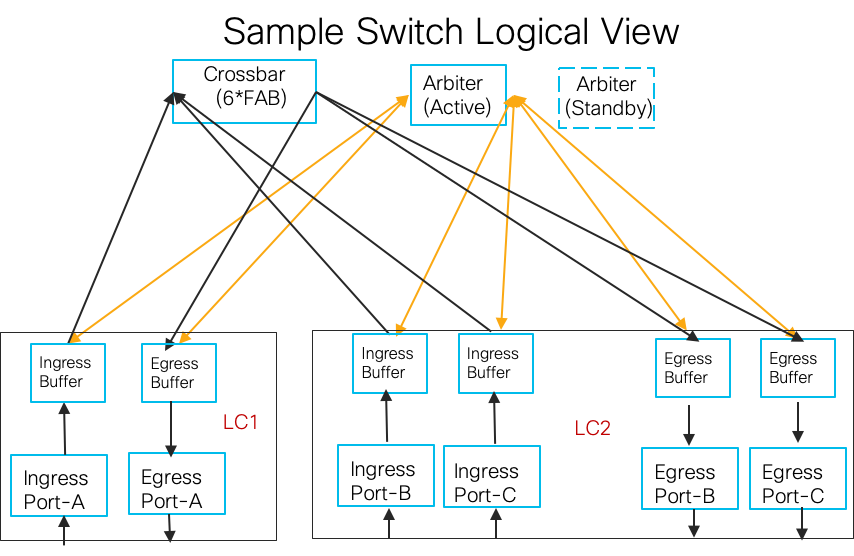

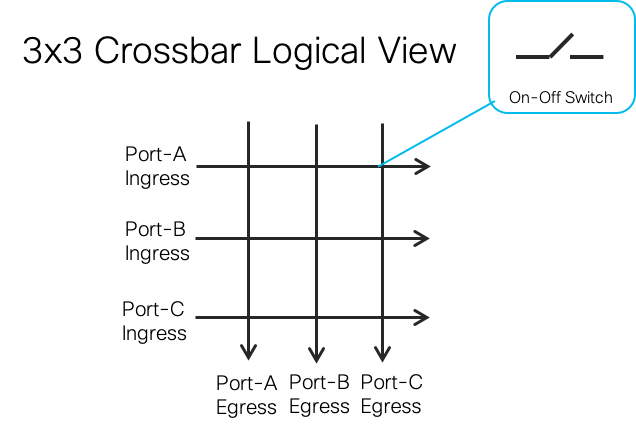

For the remainder of this post let us consider a simplistic 3-port sample MDS switch and its logical functional view with the connections as shown below.

A three-line summary of the switch operation is as follows: Frames enter the switch via ingress ports, and a forwarding decision is made based on the frame's D_ID to pick an egress port. The ingress port makes a request to the arbiter to check if the frame can be transferred to that egress port. Once the arbiter grants the request, the frame is sent via the crossbar to the egress port and out of the switch.
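
The forwarding decision in the first step can be pictured as a table lookup keyed on the destination address. The snippet below is only a minimal sketch: the table contents, the port names and the helper functions `pick_egress_port` and `split_d_id` are hypothetical, and the real lookup happens in the forwarding ASICs rather than in software. The one standards-based detail is that an FC address is 24 bits wide and is split into domain, area and port bytes.

```python
# Hypothetical forwarding table for the 3-port sample switch: D_ID -> egress port.
# The D_ID values and port names are made up for illustration.
FORWARDING_TABLE = {
    0x010100: "port-A",
    0x010200: "port-B",
    0x010300: "port-C",
}


def pick_egress_port(d_id):
    """Return the egress port for a frame's D_ID, or None if no route is known."""
    return FORWARDING_TABLE.get(d_id)


def split_d_id(d_id):
    """Split a 24-bit FC address into its (domain, area, port) bytes."""
    return (d_id >> 16) & 0xFF, (d_id >> 8) & 0xFF, d_id & 0xFF


print(pick_egress_port(0x010300))  # port-C
print(split_d_id(0x010300))        # (1, 3, 0)
```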

Now having covered the basics, let’s look at five important architectural aspects of the Cisco MDS directors that put them in pole position with clear differentiation and value to our customers. I am assuming here that you have a general awareness of the workings of Fibre Channel.

1) Elimination of Head of Line blocking

Let us start with an analogy. At a traffic junction, the right turn lane (or left – depending on which side of the world you live in) can be taken even when the traffic light is red. Now imagine that there is a car at the head of the free right turn lane that wants to go straight. Every car behind it has to wait till the traffic light turns green. Now replace cars with frames and the right lane with ports. This is exactly what can happen inside switches too, and it is called head of line (HoL) blocking.

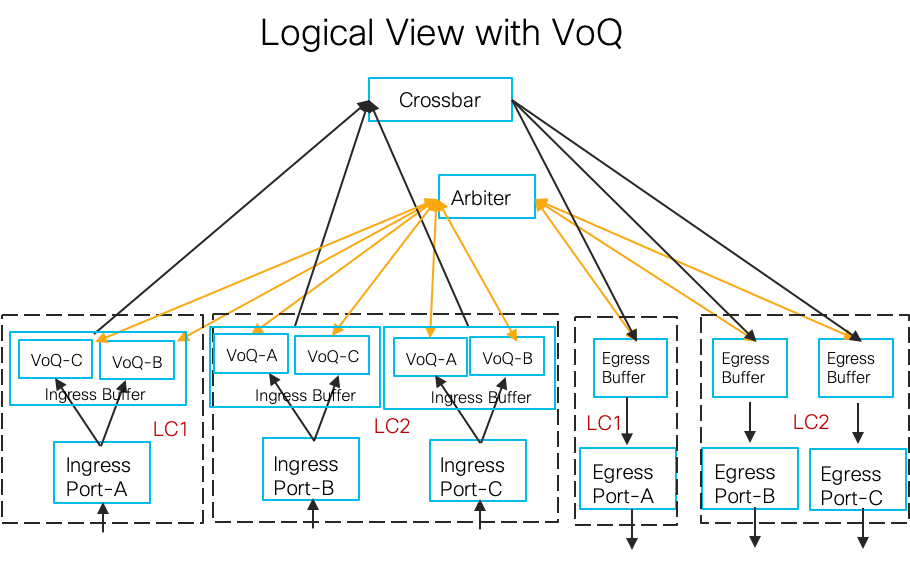

The MDS switches avoid HoL blocking by using a technique called Virtual Output Queueing (VoQ). This means that at every ingress port, logical queues are carved out from the ingress port buffers for every possible egress port, as shown in the diagram below. This way a given frame can only be blocked in the switch by another frame destined to the same egress port. So in our sample 3-port switch, every ingress port will have 2 VoQs (one for each port other than itself), for a total of (2*3)=6 VoQs in the switch. Note that an actual MDS switch could have up to 4 VoQs per ingress/egress port combination and up to 4K VoQs per ingress port. In our sample switch, however, we consider only one VoQ per ingress/egress pair for simplicity.

Consider LC1, where the ingress port-A has one frame to send to port-C and one frame to send to port-B. Suppose port-C is congested, its egress buffers are exhausted, and it cannot take any more frames from any ingress port. In this situation:

- Without VoQs, the A->C frame at the head of the ingress queue of port-A would block the frame from A->B. The entire ingress port-A would continue to be blocked till the congestion at port-C cleared.

- With separate VoQs carved out for port-B and port-C at port-A, the A->B frame can go through, and only the A->C frame has to wait till congestion at C is cleared.
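
The two cases above can be reproduced with a toy model. This is a sketch under simplifying assumptions, not a description of the MDS implementation: frames are represented only by the name of their egress port, the congested port stays congested for the whole run, and the function names `drain_single_fifo` and `drain_voqs` are made up for this post.

```python
from collections import deque


def drain_single_fifo(frames, congested):
    """Single ingress FIFO: only the head frame may be sent.

    Returns the frames that get through while the `congested` ports stay blocked.
    """
    queue = deque(frames)
    sent = []
    while queue and queue[0] not in congested:
        sent.append(queue.popleft())
    return sent


def drain_voqs(frames, congested):
    """One VoQ per egress port: a frame waits only behind frames
    destined to the same egress port."""
    voqs = {}
    for egress in frames:
        voqs.setdefault(egress, deque()).append(egress)
    sent = []
    for egress, queue in voqs.items():
        if egress not in congested:
            sent.extend(queue)
    return sent


# Frames queued at ingress port-A, identified by their egress port.
frames_at_port_a = ["C", "B"]
print(drain_single_fifo(frames_at_port_a, congested={"C"}))  # []
print(drain_voqs(frames_at_port_a, congested={"C"}))         # ['B']
```

With a single queue nothing leaves port-A, because the A->C frame sits at the head. With per-egress queues the A->B frame is sent and only the A->C frame waits.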

Going back to the traffic light analogy, it is like carving out sub-lanes in the rightmost lane – one strictly for turning right and another for going straight. With this, cars can always take the right turn unless the lane after the right turn is blocked.

VoQs offer the best utilisation of the switch resources under most conditions without allowing a few bad actors to jeopardise the entire switch operation. This behaviour is especially important for FC switches that are used to build “no-drop” fabrics while maintaining the required level of frame switching performance.

2) Central Arbitration

FC is designed to transport storage protocols (SCSI, NVMe) and these protocols are averse to frame drops. The host storage stacks are designed with the assumption that the transport medium is lossless. Any frame drop in the fabric will result in hosts pausing I/Os and attempting error recovery for extended periods with an eventual drop in performance.

The “no-drop” behaviour is achieved on FC links by using buffer-to-buffer (B2B) crediting. I shall not get into the details of B2B crediting in order to not deviate from the topic. In short, B2B crediting ensures that every transmitting FC port sends out a frame on the link only if it is assured that the receiving peer port has enough buffer space to receive and store the frame.

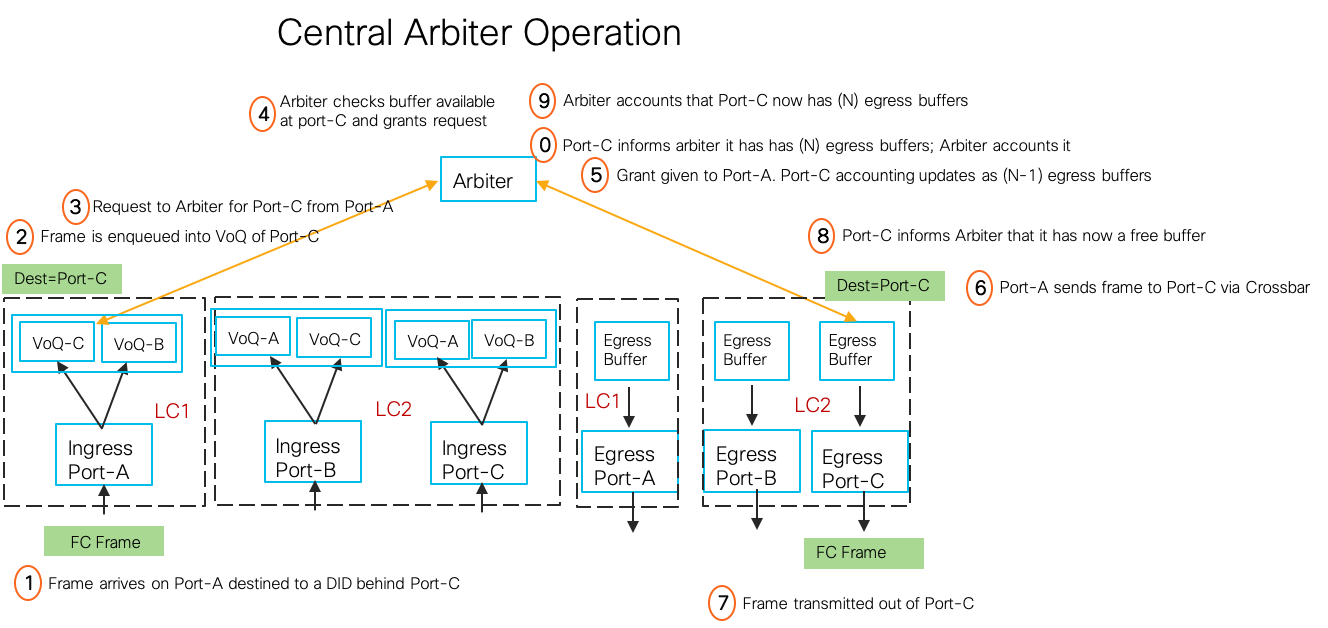

But what happens inside the switches when frames are switched from one FC port to another? The MDS switch uses a crediting logic inside the switch to ensure that no frames are dropped due to lack of resources. The key is to run this crediting end-to-end, i.e. from an ingress port to an egress port (which could be on any LC of the switch), covering all the paths inside the switch. This is achieved by a central arbiter logic in the switch that keeps account of the buffers available at every egress port. An ingress port wanting to send a frame to an egress port first asks the arbiter if there are enough credits on the egress port. Only if the arbiter grants the request can the ingress port send a frame destined to the egress port. This way the no-drop semantic is preserved within the switch also. Note that every single frame switched has to involve the central arbiter. The arbiter operation for our sample switch is shown below.

Without the central arbiter, frames would have to be sent from ingress to egress ports without knowledge of whether there are buffers available at the egress port to hold them. This blindfolded approach can result in unexpected frame drops inside switches. Imagine what this means: The ports took all the trouble of doing B2B crediting on external links to ensure no frames are dropped, but the switch ended up dropping frames inside – totally unacceptable!

Central arbitration ensures frames are not dropped inside the switches under most conditions. Imagine the case where egress port-C is congested and ingress port-A has a frame to send to port-C. The arbitration request from port-A will be put on hold, and port-A will continue to hold the frame and also hold back the B2B credits from its link peer. There will be a ripple effect that back-pressures the link peer and continues all the way to the traffic source, forcing it to slow down. The moment buffers free up at port-C, the arbiter grants the pending requests and frames start flowing again from port-A. This would also kickstart the external B2B crediting, and traffic from the source would start flowing again. Note that during this whole congestion scenario no frames were dropped, so no host-based error recovery actions were triggered.
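
The request/grant accounting described in this section can be sketched as a small credit counter per egress port. This is a toy model under stated assumptions: the `Arbiter` class, its method names and the first-come first-served handling of held requests are all hypothetical, and the real arbiter is hardware on the supervisor.

```python
class Arbiter:
    """Toy central arbiter: tracks free buffers (credits) per egress port."""

    def __init__(self, egress_credits):
        self.credits = dict(egress_credits)
        self.pending = []  # requests on hold, in arrival order

    def request(self, ingress, egress):
        """Grant if the egress port has a free buffer, else hold the request."""
        if self.credits[egress] > 0:
            self.credits[egress] -= 1
            return True
        self.pending.append((ingress, egress))
        return False

    def buffer_freed(self, egress):
        """Called when the egress port sends a frame out and frees a buffer.

        The freed buffer goes to the oldest held request for that port, if any;
        otherwise it is returned to the credit pool.
        """
        for index, (ingress, wanted) in enumerate(self.pending):
            if wanted == egress:
                return self.pending.pop(index)
        self.credits[egress] += 1
        return None


arbiter = Arbiter({"C": 1})
print(arbiter.request("A", "C"))   # True: port-C has a free buffer
print(arbiter.request("B", "C"))   # False: request is held, frame stays at port-B
print(arbiter.buffer_freed("C"))   # ('B', 'C'): the held request is now granted
```

A frame is never sent towards port-C unless a buffer has been reserved for it, so the congested case results in waiting rather than dropping.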

3) Consistent low latency with a non-blocking crossbar

The MDS director uses specialised crossbar ASICs in the fabric modules that form the core of the switching functionality. The crossbar can be thought of as an (n*n) mesh connecting ingress ports to egress ports, with an “On-Off switch” at every crosspoint. The physical and logical views are as shown in the diagram below.

Crossbars are very efficient for switching because they can close several crosspoints at the same time, allowing frames to be transferred between multiple ports simultaneously. The performance of crossbars is further boosted by adding several of them in parallel. The MDS directors can accommodate up to six fabric modules in the crossbar complex, as shown in the above physical view. A set of intelligent algorithms spreads the traffic from ingress LCs across the six fabric modules based on the real-time traffic load on each. The egress LCs can receive traffic from any of the six fabric modules and can reorder frames (if required) to preserve the original frame order. The modular fabric modules can be replaced or upgraded non-disruptively.
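
To make the two ideas in this paragraph concrete, here is a minimal sketch of load-based module selection and of egress resequencing. Both functions are illustrative assumptions: the post does not describe the actual algorithms, and tagging frames with a sequence number is simply one common way of restoring order.

```python
def pick_fabric_module(loads):
    """Return the index of the least-loaded fabric module."""
    return min(range(len(loads)), key=lambda i: loads[i])


def resequence(arrivals):
    """Release frames in sequence order, holding any that arrive early.

    `arrivals` is an iterable of (sequence_number, frame) pairs in the order
    they reach the egress LC; sequence numbers are assumed to start at 0.
    """
    held = {}
    next_seq = 0
    released = []
    for seq, frame in arrivals:
        held[seq] = frame
        while next_seq in held:
            released.append(held.pop(next_seq))
            next_seq += 1
    return released


# Hypothetical load figures for six fabric modules.
print(pick_fabric_module([5, 2, 7, 2, 9, 4]))              # 1
# Frame 1 overtook frame 0 by travelling through a different fabric module.
print(resequence([(1, "f1"), (0, "f0"), (2, "f2")]))       # ['f0', 'f1', 'f2']
```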

The MDS crossbar crosspoint links run at 3 times the speed of the links connecting the LCs to the crossbar, so the crossbar can transfer three times as many frames from each ingress port to each egress port in unit time. With this speedup there is no blocking inside the crossbar, making it a fully non-blocking and no-drop crossbar. The speedup also results in a constant delay for each frame through the switch and allows the departure of frames to be precisely scheduled, resulting in consistent latency for frames within the switch.

Consistent low latency is very important for storage protocol performance. Host applications perform better when I/O operations complete in a time-bound fashion. Generally, the host volumes are provisioned on storage arrays using a performance (latency) based tiering system with strict SLA requirements. FC switches and fabrics that can deliver consistent latency ensure that storage SLAs are not compromised due to random delays in the fabric. The MDS crossbar is designed to ensure this.

4) Source fairness

When several ingress ports are asking the arbiter to switch the frames that they receive, there is a possibility of an unfair situation. Consider a case where two ingress ports, A and B, are sending frames to one egress port-C. Suppose at some point ingress port-A has 100 frames to send to port-C and port-B has only 1 frame to send to port-C. If port-A happens to make its 100 arbiter requests first, there will be a burst of 100 frames from port A->C before the one frame can be sent from port B->C. As a result, the frame from port-B will experience a lot of latency. This is unfair to port-B.

This unfairness is avoided on the MDS by allowing each ingress port only a limited number of outstanding arbiter requests for any output port. This means that even if port-A has 100 frames and port-B has only one, the port-B request will be considered by the arbiter on par with those from port-A. This results in a much fairer switching system.
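
The effect of capping outstanding requests can be seen in a small simulation of the 100-frames-versus-1 example. It is a sketch under assumptions of my own: the arbiter here serves requests strictly first-come first-served, port-A always gets to request first, and the cap of 2 is an arbitrary value chosen for illustration, not an MDS parameter.

```python
from collections import deque


def grant_order(backlog, max_outstanding):
    """Return the order in which frames for one egress port are granted.

    backlog: dict mapping ingress port -> number of frames waiting.
    max_outstanding: cap on requests an ingress port may have pending at once.
    """
    remaining = dict(backlog)
    outstanding = {port: 0 for port in backlog}
    requests = deque()

    def top_up(port):
        # Issue new requests until the port hits its cap or runs out of frames.
        while remaining[port] > 0 and outstanding[port] < max_outstanding:
            requests.append(port)
            remaining[port] -= 1
            outstanding[port] += 1

    for port in backlog:  # ports make their initial requests in dict order
        top_up(port)

    grants = []
    while requests:
        port = requests.popleft()
        grants.append(port)
        outstanding[port] -= 1
        top_up(port)
    return grants


backlog = {"A": 100, "B": 1}
print(grant_order(backlog, max_outstanding=100).index("B"))  # 100
print(grant_order(backlog, max_outstanding=2).index("B"))    # 2
```

Without an effective cap, the single frame from port-B is granted only after all 100 frames from port-A. With a cap of 2 it is granted third.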

Source fairness ensures that ports that are very chatty do not take undue advantage of the no-drop behaviour inside the switch. Every port is treated equally irrespective of how much traffic it has to send. All ports experience uniform latency. This is especially useful in circumstances where applications with different I/O traffic profiles are sharing the same switch port. For example, a short OLTP transaction is not starved out by a voluminous backup operation.

[Note: The QoS feature of MDS can be used to give preferential treatment to certain ports based on a user configured policy. I have omitted the detail of how QoS interacts with source fairness to keep it simple]

5) In-order delivery

In-order delivery of frames in an FC Exchange is a desirable feature from an FC standards compliance perspective. It eliminates the need for exchange reassembly at the recipient end device, which would be required if frames were delivered out of order. Though most of today's end devices are capable of performing the reassembly, its frequent invocation can result in suboptimal performance. Moreover, FICON mainframe environments mandate in-order delivery of frames all the time. So switches and fabrics are expected to preserve frame order in an exchange.

The MDS forwarding ASICs have advanced algorithms that ensure ordered delivery of frames under all circumstances including dynamic and unexpected fabric topology changes.

To summarise, we looked at five important characteristics of MDS Directors viz.

- No Head of Line blocking of frames inside the switch for efficient use of switch resources

- Central Arbitration to ensure frames are never dropped inside of switches

- Consistent Low Latency to ensure application I/O performance is never compromised

- Source Fairness to ensure all ports are given a proportionate share of switch resources

- In-Order frame delivery to ensure end-devices don’t unnecessarily spend cycles reassembling frames of I/Os

Note: Even though this post was specifically on the MDS director, all the above features and their benefits are available on the MDS fabric switches also, albeit built on a slightly different architecture.

These characteristics of MDS switches make them an ideal choice to build a high performance FC SAN fabric. The MDS fabric can meet the highest levels of application I/O performance requirements and satisfies the needs of all types of storage protocols, from SCSI to NVMe to FICON.

If you are deciding on an FC fabric for your mission critical SAN infrastructure, let the above considerations drive your decision. Find out if your switch vendor can meet all these requirements.

Comments or Questions? You know what to do!

[PS: A note of thanks to colleagues Fausto Vaninetti and Paresh Gupta for help in reviewing.]

Tags:

- Arbiter

- Cisco MDS Architecture

- Consistent Low Latency

- Crossbar

- FC-SAN

- HoL

- MDS advantages

- MDS director

- Switch architecture

- VoQ