In the early days of Application Centric Infrastructure (ACI), the policy domain was limited to a single datacenter running a single-pod ACI fabric. Policy operations were straightforward, since one Intent mapped to one enforcement layer with its own virtual transport layer. The challenge came later, when ACI had to extend policy support across heterogeneous logical domains and different infrastructure layers.

If you missed some stops on our journey, feel free to go back in time and read about the transition towards the policy model in the first part of this blog series. If you are curious about why we use policies in the contemporary Intent-driven datacenter, press the button for stations 2 and 3. Afterwards, come back to the future for a drive through the era of policy consolidation and witness how ACI has addressed this crucial challenge.

Policy-based software became infrastructure-aware

From a user perspective, it was obvious that the evolution of ACI should not stay bound to a single datacenter. Instead, the idea was to make the existing policy-based software infrastructure-aware and bound to a detailed network statement. This implied unifying the policy stacks under a common umbrella, in order to address the needs of services whose workloads run on hybrid hosting platforms.

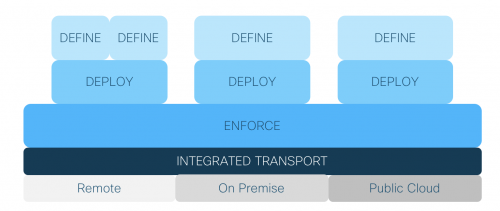

The ACI team set out to deliver a common transport layer for policy enforcement and to facilitate policy unification across different domains (on-premise, remote, public). They wanted to avoid the formation of “policy islands”, that is, individual solutions that only work for a specific domain and provider, e.g. policy for VMware, Hyper-V/WAP/AzureStack, Kubernetes, bare metal, etc. Figure 1 depicts the concept of a “Policy Connectivity Broker”: a generic abstraction layer that aggregates various policies on top of heterogeneous physical locations.

Figure 1: Policy harmonization over common enforcement and transport layer

ACI Anywhere is the leading-edge initiative to spread the application Intent globally: it allows you to define policies for any workload and ensures their integrated enforcement and secure forwarding anywhere. With a smooth transition, ACI helps you grow your on-premise infrastructure into stretched and multi-site datacenter solutions, and supports the extension of your services to the public cloud. ACI provides a whitelist model that enhances security on different levels based on fine-grained micro-segmentation. Another advantage of policy harmonization is workload mobility at scale, which lets you deploy, scale, and migrate applications seamlessly across multiple hybrid datacenters. Other types of policies enable deep, thorough service analytics in order to predict anomalies and adverse network behavior and to eliminate threats.
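To make the whitelist idea more concrete, here is a minimal Python sketch, assuming a heavily simplified model: endpoints are grouped into endpoint groups (EPGs), traffic between different EPGs is dropped unless a contract explicitly permits it, and traffic within the same EPG is allowed. The `Contract` class and the `is_permitted` function are hypothetical illustrations, not part of any ACI or APIC API, and the sketch deliberately ignores details such as reverse-port filters, taboo contracts and intra-EPG isolation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Hypothetical, simplified contract: the consumer EPG may reach the provider EPG."""
    consumer: str
    provider: str
    protocol: str
    port: int


def is_permitted(src_epg: str, dst_epg: str, protocol: str, port: int,
                 contracts: list[Contract]) -> bool:
    """Whitelist check: deny by default, allow only what a contract permits."""
    # Simplifying assumption: traffic inside the same EPG is allowed.
    if src_epg == dst_epg:
        return True
    # Inter-EPG traffic needs an explicit contract in the consumer -> provider direction.
    return any(
        c.consumer == src_epg and c.provider == dst_epg
        and c.protocol == protocol and c.port == port
        for c in contracts
    )


contracts = [Contract(consumer="web", provider="app", protocol="tcp", port=8080)]
print(is_permitted("web", "app", "tcp", 8080, contracts))  # True
print(is_permitted("web", "db", "tcp", 5432, contracts))   # False
```

The design choice worth noting is the default: anything not listed is denied, so adding a new EPG opens no communication paths until a contract is written for it.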

Overall, the ACI Anywhere approach lays the foundation for network programmability and automated control of multi-purpose networks and network function virtualization use cases. Let’s now look at the ACI Anywhere deployment models and their specifics.

ACI Datacenter Interconnect

Deploying multiple APIC controllers in different locations makes network management more redundant and, consequently, more reliable. This is enabled by technologies that extend the ACI cluster to other locations using deployment types such as Stretched, Multi-Pod, Multi-Site, Remote Physical Leaf, and Remote Virtual Leaf (vPod). The first three address different customer needs for datacenter extension in a specialized way, by creating a single-region or multi-region policy management domain. The objective is to provide various levels of multi-tenancy, redundancy, scalability and security for ACI deployments. The last two models extend the fabric (Availability Zone) to a remote location that is associated with the local Pod, and allow virtual spines and leafs to be included in the solution. Some of the use cases for the virtual remote pod include: extending ACI to bare-metal clouds, providing a direct connection between local and remote colocation centers (e.g. Equinix, CoreSite), supporting brownfield deployments using a lightweight remote datacenter, and mini datacenters deployed at Edge locations for faster access to specific services.

ACI branch to WAN and Campus

This deployment model lets you define a policy model suitable for integrating the datacenter with WAN and Campus, providing full end-to-end connectivity between them. Another example involves interconnecting new ACI-based deployments with traditional on-premise networks in a greenfield-brownfield style. Such an approach extends the application networking portfolio to support a richer set of SLAs, security models and QoS policies, and to provide load balancing services for various applications. Moreover, it includes a precise definition of network profiles for “User” and “Things”, which extends the previous policy specification with additional attributes such as Device, Location, and Role.

ACI and Container integration

This implementation addresses the wide adoption of container services and is guided by Cisco’s mission to support open-source tools and management APIs for deploying policies between ACI and container platforms. To reinforce the microservice mission and cloud-native applications, Cisco is cooperating with Google on Kubernetes integration with ACI, with Red Hat on OpenShift and OpenStack support, and with Cloud Foundry; all of these platforms can generate policies in ACI. OpFlex and OVS support is provided on each node, along with per-container live metrics in the APIC and complete visibility via health metrics.

ACI Virtual Edge – AVE

AVE is the evolution of the Application Virtual Switch (AVS) for ACI environments. It was designed to provide consistent policy control across multiple hypervisors such as Microsoft Hyper-V, KVM, and VMware vSphere. AVE supports hypervisor-independent APIs for network virtualization and microsegmentation, while preserving existing operational models.

ACI and public cloud

This implementation represents the multi-cloud part of the ACI Anywhere portfolio. Extending on-premise ACI to public cloud sites such as Amazon AWS and Microsoft AzureStack comes with a single point of orchestration that translates policies smoothly between ACI and AWS-specific policy constructs. This provides a common governance entity for private and public cloud deployments and guarantees operational consistency. The policy model gives applications running on AWS direct policy discovery and visibility of their ACI counterparts, granting the APIC fine-grained control over the entire ecosystem. Streaming telemetry for monitoring and troubleshooting is among the value-added tools and features that help the cluster reach its full potential.
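As an illustration of what such a policy translation involves, the following Python sketch renders an ACI-style intent (EPGs plus contracts) into cloud-style security groups with ingress rules. It assumes a simplified mapping in which each EPG becomes one security group and each contract becomes an ingress rule on the provider's group. The `Contract` class, the `translate_to_security_groups` function and the dictionary keys (`name`, `ingress`, `source_group`) are hypothetical and deliberately not the AWS API; a real translation would have to handle many more constructs than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Hypothetical contract: the consumer EPG may reach the provider EPG."""
    consumer: str
    provider: str
    protocol: str
    port: int


def translate_to_security_groups(epgs: list[str], contracts: list[Contract]) -> dict:
    """Map each EPG to one security-group-like object (illustrative schema only)."""
    groups = {epg: {"name": f"sg-{epg}", "ingress": []} for epg in epgs}
    for c in contracts:
        if c.consumer not in groups or c.provider not in groups:
            raise ValueError(f"contract references unknown EPG: {c}")
        # The provider's group accepts traffic originating from the consumer's group.
        groups[c.provider]["ingress"].append({
            "protocol": c.protocol,
            "port": c.port,
            "source_group": groups[c.consumer]["name"],
        })
    return groups


intent = [Contract("web", "app", "tcp", 8080), Contract("app", "db", "tcp", 5432)]
for epg, sg in translate_to_security_groups(["web", "app", "db"], intent).items():
    print(epg, sg)
```

Because the same `intent` list could be fed to a different renderer for another domain, this is also a small picture of the "one intent, many enforcement layers" idea behind the Policy Connectivity Broker.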

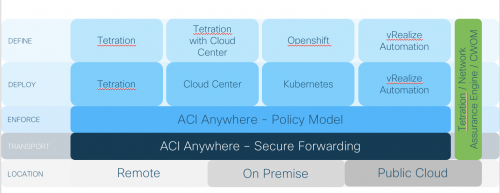

Figure 2: Policy Connectivity Broker and per-level application example

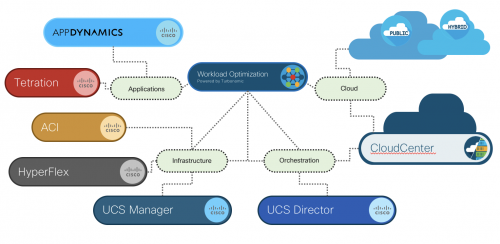

Cisco offers an extensive portfolio of applications and hardware for a policy-based framework that ties the aforementioned ACI deployments together into a policy harmonization framework (Figure 2). These applications provide full policy lifecycle management: from deployment, through analysis, monitoring and optimization cycles, to decommissioning. Figure 3 depicts some of the products in the ACI Anywhere ecosystem and their relationships.

Figure 3: Cisco Multi-cloud portfolio and building blocks of the ACI Anywhere ecosystem

The following is a summary of each domain and its representative products, along with featured blog posts that provide in-depth information on each solution:

- Application and network visibility: AppDynamics [1, 2], Tetration [3, 4, 5]

- Infrastructure: ACI [6], HyperFlex [7], UCS Manager [8]

- Orchestration: UCS Director, CloudCenter [9]

- Decision automation: Workload Optimization Manager [10]

- Network and Intent assurance: Network Assurance Engine [11, 12]

To conclude, applying a policy-based approach to your datacenter, with the option to extend the same model to any workload on any hypervisor in any cloud, is a cornerstone of modern infrastructure and applications. It improves visibility and harmonizes all your security and QoS policies into a common deployment model, managed by a single orchestration entity. The benefits of embracing state-of-the-art technology in your datacenter include real-time full visibility, network assurance, agile and automatic deployments, security protection, reduced OPEX and a shorter time to market, among others. We get consistent workloads that fully reflect the application Intent and map it to an accurate network design. This approach enables fast technology adoption and helps accelerate your projects with a higher success rate.

Having said this, the term “policy harmonization” boils down to ACI Anywhere, because we like to emphasize technology hype. Indeed, introducing a buzzword has proven to be a good way to reshape thinking, help you and your teammates start planning, and convince your leadership that substantial action was due yesterday!

And before we say goodbye, let’s reflect on our full journey, which has been split into four sections. The first one covered the technology timeline as a foundation for modern datacenter architectures. We discussed how the expansion of heterogeneous applications triggered significant refactoring of networks, protocols and datacenter design, with the aim of addressing customer-specific use cases. The network became open, agile and programmable. We then elaborated on the policy concept as the foundation of the novel Intent-driven networking, which is particularly responsible for supporting programmable networks and ACI extensions for applications that run everywhere. We described the different ACI deployment models and listed some specialized products and technologies from the Cisco portfolio that help you build your future infrastructure. And this is the synopsis of the ACI Anywhere mission. We end our time-machine travel here, allowing ourselves some quality time in the current era of policy harmonization.