In the previous post, I described how modern, custom-built applications that span multiple heterogeneous environments overtook the main data center business. The cloud and IoT disruption broke up the old monolithic applications and created the need for highly available, elastic resources at scale. A new business opportunity opened up: ensuring seamless and secure IT services over distributed cloud architectures. This is how the Developer became the new Customer.

Now, how did we manage in the days when cloud workload protection was an unknown?

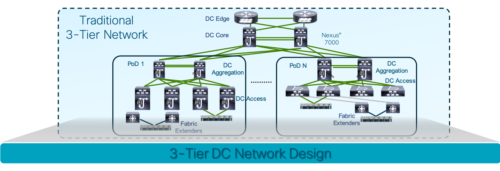

Remember the “millennial” data center? This traditional 3-tier model was the de facto architecture when we wanted to inspect every single packet at the perimeter, and latency and throughput were not a big concern. It was a solid model that served its purpose at a time when most of the data and application traffic in the DC came down to north-south communication. Today, however, the network is even more critical to delivering applications than it was years ago.

So what does it cost to acquire firewalls and configure VLANs to serve versatile applications and billions of IoT objects that generate 2 exabytes of data daily?

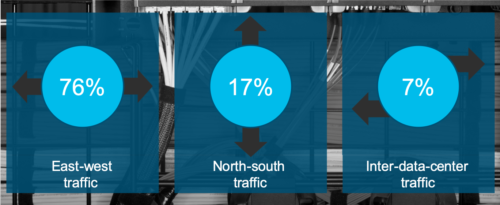

The birth of the 2-tier Spine-Leaf “Gen Z” data center tried to reduce that cost by optimizing the fabric design: keeping the stateful firewall inspection on the DC perimeter and allowing fine-grained East-West traffic segmentation. The new way of segmentation proved powerful in isolating lateral malicious traffic that had already penetrated the data center. It also confirmed that legacy firewall protection falls short in such a case. Do you come from the SDN era, or are you fed up with legacy? Great, then you can embrace segmentation via an East-West firewall context, optimize the investment, and still keep the security wall intact: security on demand.

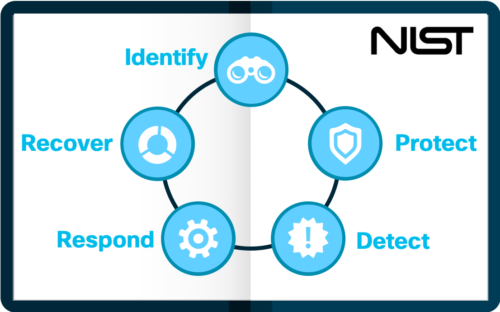

With the application being the role model in the multicloud world, security shifts away from the network alone. Your duty is to ensure the security model follows the application, regardless of the workload functionality and placement. You wonder why the traditional solutions cannot help here? To answer this, let’s see how the workload protection method works in the case of an attack. Remember some of the famous attacks that made the headlines, for example the WannaCry ransomware attack? If we split the process according to the timeline, it boils down to the following workflow:

Before the attack: We want to recognize the current communication relationships of the applications and validate them against our playbook. If they match, then we need to enforce a security policy and let the system take care of blocking unwanted communication. Furthermore, we want to harden the policy by blocking SMB ports to all external hosts that do not require file sharing.

During the attack: Suppose the attack still comes through because the traffic somehow escaped the policy. Then you want to block the attack with another policy enforcement or remove the compromised source of the attack from the network.

After the attack: You want to determine the damage of the attack by performing a flow search. You also want to contain the attack and identify compromised sources. And finally, you should enforce a fine-tuned policy and block further spread of the same attack.
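The “during” and “after” steps above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not how any particular product works: the `Flow` record, the host names, and the helpers `flow_search` and `containment_rules` are all hypothetical, and the flow log is made up. Port 445 is used because that is the TCP port SMB runs on.

```python
from dataclasses import dataclass

SMB_PORT = 445  # SMB over TCP, the port WannaCry used to spread


@dataclass(frozen=True)
class Flow:
    """Hypothetical flow record: who talked to whom, on which port."""
    src: str
    dst: str
    dst_port: int


def flow_search(flows, compromised):
    """After the attack: hosts that received SMB traffic from a compromised source."""
    return sorted({f.dst for f in flows
                   if f.src in compromised and f.dst_port == SMB_PORT})


def containment_rules(compromised):
    """During/after the attack: deny rules that cut SMB from compromised sources."""
    return [("deny", host, "any", SMB_PORT) for host in sorted(compromised)]


# Made-up flow log, for illustration only.
flows = [
    Flow("web-1", "db-1", 5432),
    Flow("pc-17", "file-1", 445),
    Flow("pc-17", "pc-22", 445),
    Flow("pc-22", "web-1", 443),
]

exposed = flow_search(flows, compromised={"pc-17"})
rules = containment_rules({"pc-17"})
print(exposed)  # ['file-1', 'pc-22']
print(rules)    # [('deny', 'pc-17', 'any', 445)]
```

The flow search answers “who might be affected?”, while the generated rules are the fine-tuned policy that blocks further spread from the same source.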

Seems easy, right? And now, going back to the question, try to imagine implementing this the legacy way.

The way you would tackle this traditionally is by gathering the data from 100 billion Wireshark events collected over 3 months and saving it in several Excel documents. Having achieved some visibility, you would then apply a blacklist model (a list of all known malware), like most of the security software products on the market. The problem with this methodology is that malware is always morphing and adapting, which makes it undetectable by a traditional blacklist. If, instead of searching for all the bad actors, we focus on the good actions, the probability of preventing zero-day attacks and minimizing lateral movement in case of a security incident rises. This is the principle of the whitelist security model: regardless of any changes to the malware code, if the threat is not whitelisted, it will not work.
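To make the contrast concrete, here is a small Python sketch of the two models. Everything in it is an assumption for illustration: the hash strings, the tier names, and the ports are invented, and real products match on far richer attributes than a single hash or a three-field tuple.

```python
# Blacklist: block only what is already known to be bad.
KNOWN_BAD_HASHES = {"hash-of-known-malware-v1"}

# Whitelist: permit only what the application is known to need.
ALLOWED_FLOWS = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}


def blacklist_permits(payload_hash):
    """Permit anything that is not on the list of known malware."""
    return payload_hash not in KNOWN_BAD_HASHES


def whitelist_permits(src, dst, port):
    """Permit only flows that were explicitly approved (default deny)."""
    return (src, dst, port) in ALLOWED_FLOWS


# A morphed variant has a new hash, so the blacklist lets it through...
morphed_passes_blacklist = blacklist_permits("hash-of-morphed-variant")
# ...but its lateral SMB connection was never whitelisted, so it is denied.
morphed_passes_whitelist = whitelist_permits("web", "db", 445)
# Legitimate application traffic is unaffected.
legit_passes_whitelist = whitelist_permits("app", "db", 5432)
```

The whitelist check never inspects the malware itself, which is why a change in the malware code does not change the outcome.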

Designing a well-founded whitelist model, however, is not a given: you need to master traffic visibility in order to design accurate application whitelisting. This requires a good understanding of all the known communication and dependencies the application needs to work, so that deviations can be mitigated. The process is also iterative: policies are optimized and adapted as the application changes.
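One way to picture this iterative loop is the sketch below. It is an illustration under assumptions, not a recommended algorithm: the `min_count` threshold, the helper names, and the sample traffic are invented, and in practice a person would validate each proposed flow against the playbook before approving it.

```python
from collections import Counter


def propose_whitelist(observed, min_count=2):
    """Propose flows seen at least `min_count` times as whitelist candidates."""
    counts = Counter(observed)
    return {flow for flow, n in counts.items() if n >= min_count}


def deviations(observed, whitelist):
    """Flows outside the current whitelist, to be reviewed or blocked."""
    return sorted(set(observed) - whitelist)


# Iteration 1: learn from a baseline capture (made-up data).
baseline = (
    [("web", "app", 8080)] * 3
    + [("app", "db", 5432)] * 2
    + [("web", "db", 445)]  # seen once, so it is not proposed
)
whitelist = propose_whitelist(baseline)

# Iteration 2: the application gains a cache tier, which shows up as a deviation.
new_traffic = [("web", "app", 8080), ("app", "cache", 6379)]
to_review = deviations(new_traffic, whitelist)

# After review, the new dependency is approved and the policy adapts.
whitelist |= {("app", "cache", 6379)}
remaining = deviations(new_traffic, whitelist)
```

Each application change repeats the same cycle: observe, compare against the whitelist, review the deviations, and update the policy.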

To back this up, we often consult Gartner reports along with the recommendations of security institutions such as the NSA, the US National Vulnerability Database, the NIST Cybersecurity Framework, and the Australian Government’s Australian Cyber Security Centre. They all agree on the same point: whitelist segmentation is a crucial model for ensuring security. You need procedures and a methodology to understand what’s really going on in the data center. Bring your own device (BYOD), IoT, the cloud, application templates, code reuse, patches: they are all part of your risk and yet another security gap in your DC. Therefore, protecting them is part of your liability.

So… why do we need to do things differently?

Because it is impossible to blacklist all possible threats out there in the cloud. A firewall with an exhaustive list of blocked applications never gets the chance to act when the app is used remotely or on a mobile device. Similarly, antivirus (AV) software struggles to cope with today’s threats because it cannot keep up with threats that are updated on a daily basis.

The classification of different domains within the workload protection field (visibility, segmentation, forensics, etc.) defines three buying centers, as shown in the picture above: the network, the virtualization, and the CISO teams. The team roles imposed by the new model also change, creating new organizational challenges that call for increased cooperation and interlocking duties between the different teams.

And, finally, there are operational challenges that we have to consider. Visibility, cost of security, compliance, and maintenance have to be weighed against operational efficiency and complexity. One of the questions, for example, is how to find the right balance between agility and security. With at least 50% of revenue generated predominantly by software and services, is it worth investing? Many companies have no visibility model for their applications. The reality is that security and visibility are tightly coupled and need to be balanced at a certain cost point. But, to begin with, it is definitely worth painting the big picture and highlighting its small details.

In the next post, I will talk about the recipe for application-tailored security and discuss the common approaches of the existing solutions. Stay tuned!