This is one of the calls a network engineer dreads most. I received one many years ago, when a Tier 1 service provider lost all connectivity in a major city. I spent 48 hours in that provider's Network Operations Center, trying to find the root cause of all that chaos and to work out what the network configuration had been before the issue.

Nowadays, when moving from the lab to production happens too fast, when the arrival of the Cloud has changed the way enterprises design their IT services, when everything is expected to be “agile” and applications can interact with and even modify the network's behaviour (at least, that is what we expect from Software Defined Networking), now is a good time to consider what we can do to minimise the chance of another outage.

Plenty of things can go wrong on a production network, from bugs in routers and switches all the way up to human error. For that reason, it is important to define clear processes and to automate as many of the tasks that interact with the network as possible, avoiding fat-finger mistakes.

In an ideal world, we would improve every area in one go. In the real world, however, service providers cannot afford to change their processes and systems all at once. With that in mind, if I had to pick one element to harden, I would pick the device configuration domain.

Why? Simple: the majority of day-to-day issues (leaving aside physical faults and OS bugs, for which we can only plan high-availability solutions and pray we never need them) involve configuration changes, made either by provisioning systems or by network engineers who access the network devices directly. At this point someone could tell me that AAA should mitigate the latter. I agree, provided it is implemented properly, which unfortunately is not the case in every network.

Now that I have a target, it is time to decide on my approach. I could use one of the many SDN controllers available and make it my central point of access to the network. This should reduce the number of applications that interact with the network directly, as they can use the controller as a mediation point; the network elements would therefore be under less stress, with fewer applications and users accessing them simultaneously.

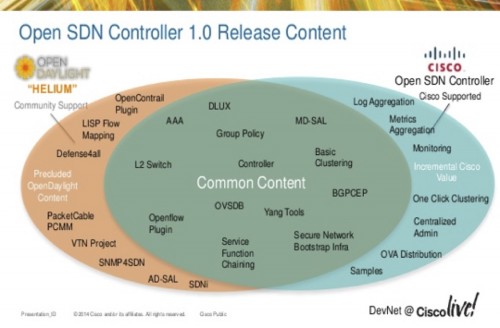

Sticking with the controller, my first question should be: open source or commercial? If I am happy to work with a community of developers, benefiting from the community's progress while accepting that I will sometimes have to solve controller issues by myself, then I could go with something like OpenDaylight.

Of course, I can also choose a commercial product such as the Open SDN Controller and simplify installation and support.

In either case, on top of the controller I will need an application that holds all the service provisioning logic and that, instead of going directly to the network devices, uses the controller as an abstraction layer. This would simplify service definition, as a service can now be defined without taking into account the devices on which it will run (allow me a small smile here: we all know that in the end the network devices will constrain the way services are designed, but let's keep dreaming). The controller should then reach the devices through the most appropriate southbound interface: in the worst case CLI, in the best case an API or a standard protocol such as NETCONF.
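
To make the abstraction-layer idea a little more concrete, here is a minimal sketch, assuming a toy controller that knows which southbound interfaces each device supports and prefers NETCONF, then an API, then CLI. The `Controller` and `Device` classes, the device names and the preference order are illustrative assumptions, not the API of OpenDaylight or any other real controller.

```python
from dataclasses import dataclass, field

# Southbound interfaces ordered from most to least preferred, following the
# text: a standard protocol such as NETCONF first, CLI as the worst case.
PREFERENCE = ["netconf", "api", "cli"]


@dataclass
class Device:
    name: str
    supported: set[str]  # southbound interfaces this (hypothetical) device offers


@dataclass
class Controller:
    devices: dict[str, Device] = field(default_factory=dict)

    def register(self, device: Device) -> None:
        self.devices[device.name] = device

    def southbound_for(self, name: str) -> str:
        """Pick the most preferred southbound interface the device supports."""
        device = self.devices[name]
        for interface in PREFERENCE:
            if interface in device.supported:
                return interface
        raise ValueError(f"no usable southbound interface for {name}")

    def provision(self, service: dict) -> list[tuple[str, str, dict]]:
        """Turn a device-agnostic service request into per-device work items.

        The application only states *what* it wants (service name and
        endpoints); the controller decides *how* to reach each device.
        """
        return [
            (endpoint, self.southbound_for(endpoint), {"service": service["name"]})
            for endpoint in service["endpoints"]
        ]
```

For example, after registering `Device("pe1", {"cli", "netconf"})` and `Device("pe2", {"cli"})`, a request for `{"name": "vpn-blue", "endpoints": ["pe1", "pe2"]}` would be planned over NETCONF for `pe1` and CLI for `pe2`, while the application never mentions either protocol.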

We could also use the controller for diagnostics and troubleshooting; we just need to create applications that use its northbound interface (NBI) to send and retrieve information.
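
As a rough sketch of such a diagnostic application, the code below reads the topology from the controller's NBI and lists nodes that terminate no link. It assumes an OpenDaylight-style RESTCONF endpoint returning JSON; the URL, port, credentials and field names (`network-topology`, `node-id`, `source-node`, `dest-node`) are assumptions to be checked against your controller's documentation.

```python
import base64
import json
import urllib.request

# Hypothetical controller address; adjust host, port and path for your deployment.
NBI_URL = "http://controller.example.net:8181/restconf/operational/network-topology:network-topology"


def fetch_topology(url: str, user: str, password: str) -> dict:
    """Retrieve the topology document from the controller's NBI (read-only GET)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        url, headers={"Authorization": f"Basic {token}", "Accept": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def isolated_nodes(topology: dict) -> list[str]:
    """Return node IDs that appear in the topology but terminate no link."""
    result = []
    for topo in topology.get("network-topology", {}).get("topology", []):
        linked = set()
        for link in topo.get("link", []):
            linked.add(link["source"]["source-node"])
            linked.add(link["destination"]["dest-node"])
        for node in topo.get("node", []):
            if node["node-id"] not in linked:
                result.append(node["node-id"])
    return result
```

Keeping the HTTP call and the analysis in separate functions means the troubleshooting logic can be tested against saved NBI responses without touching the live network.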

If we do not feel comfortable with the SDN approach, we can opt for a more traditional solution based on a non-traditional orchestrator.

Network Service Orchestrator (NSO), previously known as Tail-f NCS, is an orchestrator designed to cover both traditional network scenarios and the new ones that have emerged with the introduction of Network Function Virtualization (NFV). What makes this application so different from traditional provisioning systems? The main difference is that services are defined in data models, YANG models to be more specific, rather than hard-coded in software. NSO then uses these data models to automatically generate the corresponding user interfaces, northbound APIs, database schemas and southbound command sequences. So what you see is what you get in the NBI too. End of the never-ending story of API gaps!
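
The model-driven idea can be illustrated with a toy example, assuming a plain Python dict stands in for a YANG service model. This is not how NSO is programmed (NSO compiles real YANG); the model, leaf names and the "ios-like"/"junos-like" templates are hypothetical. The point is that one model definition drives both northbound validation and southbound rendering, so adding a leaf to the model changes what the API accepts without touching the validation code.

```python
# A toy "service model": a plain dict standing in for a YANG service model.
# Leaf names, types and the templates below are hypothetical.
VLAN_SERVICE_MODEL = {
    "name": {"type": str},
    "vlan_id": {"type": int, "range": (1, 4094)},
    "interface": {"type": str},
}

# Per-platform southbound templates; the service model never mentions vendors.
TEMPLATES = {
    "ios-like": ["vlan {vlan_id}", " name {name}", "interface {interface}",
                 " switchport access vlan {vlan_id}"],
    "junos-like": ["set vlans {name} vlan-id {vlan_id}",
                   "set interfaces {interface} unit 0 family ethernet-switching vlan members {name}"],
}


def validate(model: dict, request: dict) -> dict:
    """Check a northbound request against the model, as a generated API would."""
    missing = set(model) - set(request)
    unknown = set(request) - set(model)
    if missing or unknown:
        raise ValueError(f"missing={sorted(missing)} unknown={sorted(unknown)}")
    for leaf, spec in model.items():
        value = request[leaf]
        if not isinstance(value, spec["type"]):
            raise TypeError(f"{leaf} must be {spec['type'].__name__}")
        low, high = spec.get("range", (value, value))
        if not low <= value <= high:
            raise ValueError(f"{leaf}={value} outside {low}..{high}")
    return request


def render(platform: str, request: dict) -> list[str]:
    """Generate the southbound command sequence from the validated service data."""
    return [line.format(**request) for line in TEMPLATES[platform]]
```

The same validated request can be rendered for either platform, which is the decoupling between service definition and device implementation that the text describes.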

The idea behind this approach is that there is no need for long development cycles to put a new service into production or to modify it. Every step of the service automation is defined explicitly and declaratively, in a way that network engineers can handle themselves, and therefore there is no need for coding, just model definition!

Another great benefit is that support for most new devices can be added in less than two weeks, so introducing new network elements, or even swapping vendors entirely, will not hit a showstopper in the provisioning system, because service definition and service implementation are truly decoupled.

Finally, one benefit I wish I had had in the past: transactions. What does that mean? Think of a transaction like a snapshot of a virtual machine. If something goes wrong on the network after a change, I can go back to a specific point in time when the network was fine, and I can do it for the whole network in one shot!
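
A minimal sketch of the network-wide transaction idea, assuming the whole network's configuration fits in an in-memory dict keyed by device name. The `NetworkTransactions` class, device names and configuration keys are hypothetical; a real orchestrator also has to push changes to devices and handle partial failures there, which this sketch leaves out.

```python
import copy


class NetworkTransactions:
    """Network-wide configuration store with numbered checkpoints.

    Each commit applies changes to several devices as one unit and records
    the previous network-wide state, so everything can be rolled back in one
    step, much like reverting a virtual machine snapshot.
    """

    def __init__(self, running: dict[str, dict[str, str]]):
        self.running = copy.deepcopy(running)
        self.history: list[dict[str, dict[str, str]]] = []

    def commit(self, changes: dict[str, dict[str, str]]) -> int:
        """Apply all changes or none; return the checkpoint id of this commit."""
        candidate = copy.deepcopy(self.running)
        for device, settings in changes.items():
            if device not in candidate:
                # Abort before the running state is touched: all-or-nothing.
                raise KeyError(f"unknown device {device}")
            candidate[device].update(settings)
        self.history.append(self.running)
        self.running = candidate
        return len(self.history)

    def rollback(self, checkpoint: int) -> None:
        """Restore the whole network to its state just before commit `checkpoint`."""
        self.running = copy.deepcopy(self.history[checkpoint - 1])
        del self.history[checkpoint - 1:]
```

Because each commit is staged on a copy and only swapped in when every change is valid, a change that fails on one device never leaves the others half-configured.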

As a side note, network engineers can use NSO as a CLI proxy, entering commands in the NSO server's CLI, which then sends them to the network devices. This way every command is logged and benefits from the transactional functionality. If I shut down an interface through the NSO CLI and start hearing shouts from the other people in the room, I just execute “rollback configuration” and it is as if nothing had happened.
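
The logging side of a CLI proxy can be sketched as follows, assuming a hypothetical `send` callable that stands in for the real device session (SSH, NETCONF or similar) so the example runs offline. It is not NSO's implementation; it only shows how recording every command before forwarding it makes the classic who/what/when questions answerable.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class AuditEntry:
    when: datetime
    who: str
    device: str
    what: str


class CliProxy:
    """Log every command before forwarding it to a device.

    `send` is a hypothetical callable (device, command) -> output standing in
    for the real southbound session; it is injected so the sketch runs offline.
    """

    def __init__(self, send: Callable[[str, str], str]):
        self.send = send
        self.audit: list[AuditEntry] = []

    def run(self, user: str, device: str, command: str) -> str:
        # Record who/what/when first, so even a failing command leaves a trace.
        self.audit.append(AuditEntry(datetime.now(timezone.utc), user, device, command))
        return self.send(device, command)

    def history(self, device: str) -> list[AuditEntry]:
        """Answer 'who did what, and when' for one device."""
        return [entry for entry in self.audit if entry.device == device]
```

Logging before forwarding is a deliberate choice: if the device session hangs or the command breaks connectivity, the audit trail still shows who issued it.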

In any case, we can forget about any benefits from network abstraction, service models or transactional tasks if we do not implement strict processes. Direct access to the network should be controlled and logged, not for finger pointing, but to keep the network as secure and stable as possible and, when issues arise, to be able to answer the classic questions (who, what and when) and get back to a normal state as soon as possible.