8 reasons why you need fluid tech for data analytics success

Harnessing data through analytics is key to staying competitive and relevant in the age of connected computing and the data economy.

Analytics now combines statistics, artificial intelligence, machine learning, deep learning and data processing in order to extract valuable information and insights from the data flowing through your business.

Your ability to harness analytics defines how well you know your business, your customers, and your partners – and how quickly you understand them.

But it’s still hard to gain valuable insights from data. Collectively, the challenges are known as the ‘5 Vs of big data’:

- The volume of data has grown so much that traditional relational database management software running on monolithic servers is incapable of processing it.

- The variety of data has also increased. There are many more sources of data and many more different types.

- Velocity describes how fast the data is coming in. It has to be processed, often in real time, and stored in huge volumes.

- Veracity of data refers to how much you can trust it. Traditional structured data (i.e. in fixed fields or formats) goes through a validation process. This approach does not work with unstructured (i.e. raw) data.

- Value is hard to derive from the data because of all of the above.

If you’re wrestling with the 5 Vs, chances are you’ll be heading to ExCeL London for the annual Strata Data Conference on 22-24 May 2018.

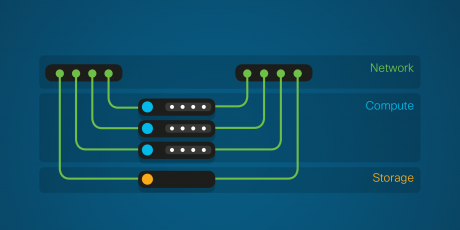

We’ll be there on Booth 316, together with partners including SUSE, showcasing how progress in compute, storage, networking, and distributed data processing frameworks can help address these challenges.

1) The infrastructure evolution

Compute demands are growing in direct response to data growth. More powerful servers, or more servers working in parallel (aka scale-out), are needed.

Deep learning techniques, for example, have an insatiable appetite for data, which makes a robust HDFS (Hadoop Distributed File System) cluster a great way to achieve scale-out storage for collecting and preparing that data. Machine learning algorithms can run on traditional x86 CPUs, but GPUs can accelerate these algorithms by up to a factor of 100.

New approaches to data analytics applications and storage are also needed because the majority of the data available is unstructured. Email, text documents, images, audio, and video are data types that are a poor fit for relational databases and traditional storage methods.

Storing data in the public cloud can ease the load. But as your data grows and you need to access it more frequently, cloud services can become expensive, while the sovereignty of that data can be a concern.

Software-defined storage is a storage virtualisation technology that separates storage software from the underlying hardware, allowing you to shift large amounts of unstructured data to cost-effective, flexible solutions located on-premises. This helps assure performance and data sovereignty while reducing storage costs over time.

You can use platforms such as Hadoop to create shared repositories of unstructured data known as data lakes. Because a data lake runs on a cluster of servers, users across the organisation can access it. However, it must be managed in a compliant way, using enterprise-class data management platforms that let you store, protect and access data quickly and easily.

2) Need for speed

The pace of data analytics innovation continues to increase. Previously, you would define your data structures and build an application to operate on the data. The lifetime of such applications was measured in years.

Today, raw data is collected and explored for meaningful patterns using applications that are rebuilt when new patterns emerge. The lifetime of these applications is measured in months – and even days.

The value of data can also be short-lived, so there’s a need to analyse it at source, in real time, as it arrives. Data solutions that employ in-memory processing, for example, give your users immediate, drill-down access to all the data across your enterprise applications, data warehouses and data lakes.

3) Come see us at the Strata Data Conference

Ultimately, your ability to innovate at speed with security and governance assured comes down to your IT infrastructure.

Cisco UCS (Unified Computing System) is a trusted computing platform proven to deliver lower total cost of ownership (TCO) and optimum performance and capacity for data-intensive workloads.

85% of Fortune 500 companies and more than 60,000 organisations globally rely on our validated solutions. These combine our servers with software from a broad ecosystem of partners to simplify the task of pooling IT resources and storing data across systems.

Crucially, they come with role- and policy-based management, which means you can configure hundreds of storage servers as easily as you can configure one, making scale-out a breeze as your data analytics projects mature.

If you’re looking to transform your business and turn your data into insights faster, there are plenty of reasons to visit us on Booth 316:

4) Accelerated Analytics

If your data lake is deep and your data scientists are struggling to make sense of what lies beneath, our MapD demo, powered by data from mobile masts, will show you how to cut through the depths and find the enlightenment you seek, fast.

5) Deep learning with Cloudera Data Science Workbench

For those using a Hadoop cluster to manage their data lakes and deep learning frameworks, we’ll be demonstrating how to accelerate the training of deep learning models with Cisco UCS C240 and C480 servers equipped with 2 and 6 GPUs respectively. We’ll also show you how to support growing cluster sizes using cloud-managed service profiles rather than more manpower.

6) Get with the Cisco Gateway

If you’re already a customer and fancy winning some shiny new tech, why not step through the Gateway to grow your reputation as a thought leader and showcase the success you’ve had?

7) Find your digital twin

To effectively create a digital twin of the enterprise, data scientists have to incorporate data sources from inside and outside the data centre for a holistic 360-degree view. Come join our resident expert Han Yang for his session on how we’re benefiting from big data and analytics, and how we’re helping our customers incorporate data sources from the Internet of Things (IoT) and deploy machine learning both at the edge and in the enterprise.

8) Get the scoop with SUSE

We’re set to unveil a new integration of SUSE Linux Enterprise Server and Cisco UCS. There’ll be SUSE specialists on our booth, so you can be the first to find out more about what’s in the pipeline.

Go here to find out more and bring your people and data together.

Click to read Keep Pace in the Data Economy
